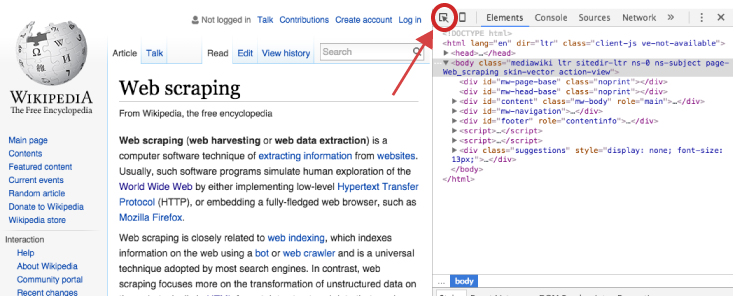

Using the Web Scraper extension you can create and test a sitemap to see how the website should be traversed and what data should be extracted. With sitemaps, you can navigate the site the way you want, and the data can be exported later. Scraper is another easy-to-use screen scraper that can extract data from an online table and upload the result to Google Docs. Just select some text in a table or a list, right-click the selected text, and choose 'Scrape Similar' from the browser menu. You will then get the data and can extract other content by adding new columns.

StartUrls examples: almost any URL from Reddit will return a result.

#Webscraper with r free#

Web Scraper, a standalone Chrome extension, is a free and easy tool for extracting data from web pages.

When searching for Posts, you can set maxItems to the same number as maxPostCount, since each post is saved as one item in the dataset. If maxItems is less than maxPostCount, the number of posts will be equal to maxItems. When searching for Communities&Users, each community has different categories inside it (e.g. New, Hot, Rising). Each of those is saved as a separate item in the dataset, so you have to account for them when setting the maxItems input. As an example, if you set maxCommunitiesAndUsers to 10 and each community has 4 categories, you will have to set maxItems to at least 40 (10 x 4) to get all the categories for each community in the resulting dataset.

Limit of communities inside a leaderboard page that will be scraped. If set to '0', all items will be scraped. A JavaScript function passed as plain text that can return custom information.
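The maxItems arithmetic above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the helper names `min_max_items` and `posts_saved`, and the `categories_per_community` parameter, are hypothetical and not part of the actor. The sketch also does not model special values such as '0'.

```python
def min_max_items(search_type, max_post_count=0,
                  max_communities_and_users=0, categories_per_community=0):
    """Smallest maxItems that keeps every expected item (hypothetical helper)."""
    if search_type == "posts":
        # Each post is saved as exactly one dataset item.
        return max_post_count
    if search_type == "communities_and_users":
        # Each category of each community is saved as its own item.
        return max_communities_and_users * categories_per_community
    raise ValueError(f"unknown search type: {search_type!r}")


def posts_saved(max_items, max_post_count):
    """Posts that end up in the dataset when maxItems caps maxPostCount."""
    return min(max_items, max_post_count)


# The example from the text: 10 communities with 4 categories each.
needed = min_max_items("communities_and_users",
                       max_communities_and_users=10,
                       categories_per_community=4)
print(needed)
```

With these inputs the helper reproduces the 10 x 4 calculation from the text, so maxItems would need to be at least that value.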

The maximum number of posts that will be scraped for each Posts Page or Communities&Users URL. The maximum number of comments that will be scraped for each Comments Page. The maximum number of "Communities & Users" pages that will be scraped if your search or startUrl is a Communities&Users type.

Reddit Scraper is an Apify actor for extracting data from Reddit. It allows you to extract posts and comments together with some user info without logging in. It is built on top of the Apify SDK, and you can run it both on the Apify platform and locally.

The startUrls input is a list of Request objects that will be deeply crawled. The search input is an array of keywords that will be used in Reddit's search engine. Each item in the array will perform a different search. This field should be empty when using startUrls. Select the type of search that will be performed: "Posts" or "Communities and users". Sort the search by Relevance, Hot, Top, New or Comments. Filter the search of posts by the last hour, day, week, month or year. maxItems is the maximum number of items that will be saved in the dataset. If you are scraping for Communities&Users, remember that each category inside a community is saved as a separate item.
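As a rough sketch of how such an input could be assembled, the Python snippet below builds an input object and enforces the rule that the search keywords stay empty when startUrls is used. The key names `startUrls` and `maxItems` come from the text. The `searches` key, the `build_input` helper, and the example subreddit URL are assumptions made for illustration, so check the actor's own input schema before relying on them.

```python
import json


def build_input(start_urls=None, searches=None, max_items=10):
    """Build an input dict for the scraper (hypothetical helper)."""
    start_urls = list(start_urls or [])
    searches = list(searches or [])
    if start_urls and searches:
        # The text says the keyword field should be empty when using startUrls.
        raise ValueError("leave the search keywords empty when using startUrls")
    return {
        # Request objects, here reduced to just a URL.
        "startUrls": [{"url": url} for url in start_urls],
        "searches": searches,  # assumed key name for the keyword array
        "maxItems": max_items,
    }


# Illustrative URL only; the text says almost any Reddit URL returns a result.
run_input = build_input(
    start_urls=["https://www.reddit.com/r/learnprogramming/"],
    max_items=40,
)
print(json.dumps(run_input, indent=2))
```

Keeping the check inside one helper means a mixed startUrls and keyword input fails early, before any run is started.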
